AmbigDocs: Reasoning across Documents on Different Entities under the Same Name

Yoonsang Lee, Xi Ye, Eunsol Choi

About

Different entities with the same name can be difficult to distinguish. Handling confusing entity mentions is a crucial skill for language models (LMs). For example, given the question "Where was Michael Jordan educated?" and a set of documents discussing different people named Michael Jordan, can LMs distinguish entity mentions to generate a cohesive answer? To test this ability, we introduce a new benchmark, AmbigDocs. By leveraging Wikipedia's disambiguation pages, we identify a set of documents, belonging to different entities who share an ambiguous name. From these documents, we generate questions containing an ambiguous name and their corresponding sets of answers. Our analysis reveals that current state-of-the-art models often yield ambiguous answers or incorrectly merge information belonging to different entities. We establish an ontology categorizing four types of incomplete answers and automatic evaluation metrics to identify such categories. We lay the foundation for future work on reasoning across multiple documents with ambiguous entities.

Types of Model Generated Answer

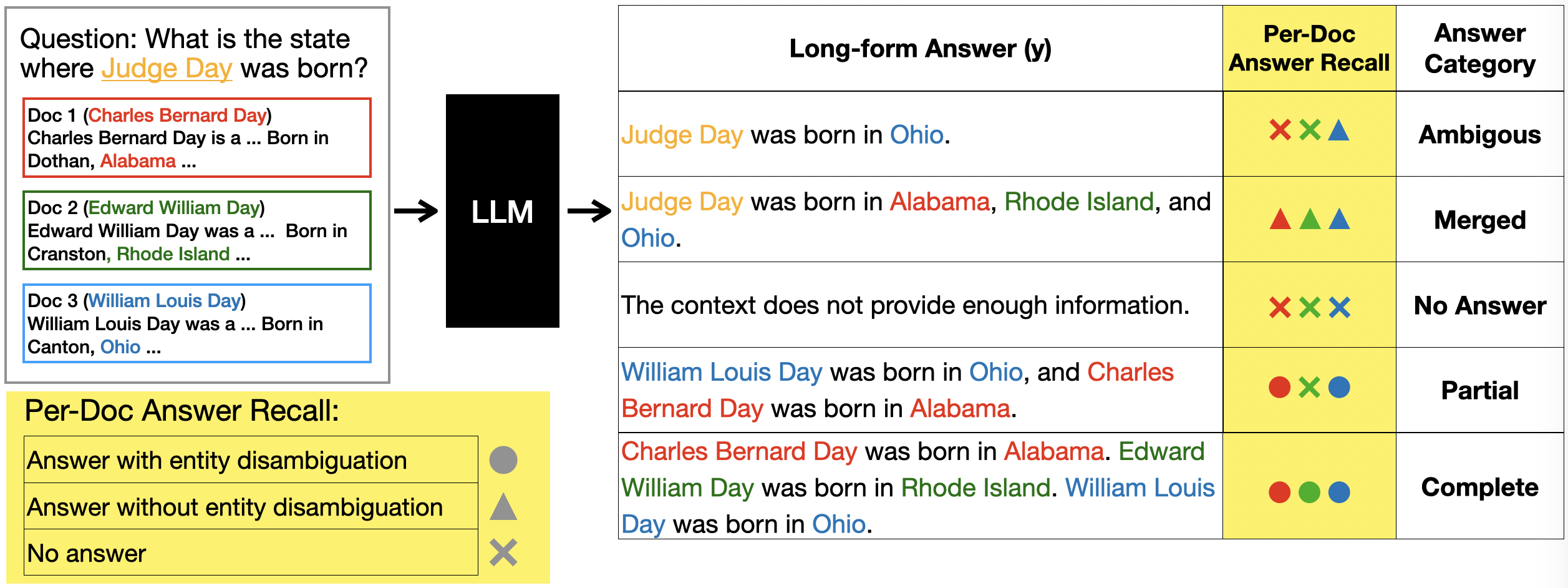

We develop an ontology of five types of answers that captures the unique challenge in AmbigDocs.

- Complete Answer: LM correctly generates all valid answers in the provided document set. Each answer is paired with the disambiguated entity name.

- Partial Answer: LM generates one or more (but not all) of the valid answers in the provided document set. Each answer is paired with the disambiguated entity name.

- No Answer: LM abstains from providng any answer.

- Ambiguous Answer: LM generates one of the valid answers without providing the disambiguated entity name.

- Merged Answer: LM generates multiple answers without providing the disambiguated entity name (merging facts about multiple disambiguated entities).

Performance of Current Models on AmbigDocs

Both open-source LLMs and GPT models suffer at identifying different entities, failing to generate complete answers. Mistral and GPT-4 exhibit the strongest performance, followed by Llama2-7b model, while larger models (Llama2-13b, GPT-3.5) significantly perform worse. Mistral generates more Partial answers but fewer Merged answers compared to GPT-4, leading to similar overall performance. Larger models such as Llama2-13b and GPT-3.5 tend to generate more Ambiguous and Merged answers rather than Complete answers, resulting in poor overall performance.

FAQ

Q. How is AmbigDocs different from existing datasets like AmbigQA?

A. Despite rich study in ambiguous question answering (QA), few work studied how LMs reason when provided with a confusing document set. The major obstacle has been the lack of annotated documents containing gold disambiguated entity-answer pairs. To enable research in this area, we construct a synthetic dataset, whose single instance consists of a question asking about an ambiguous entity and a list of gold document-answer pairs for each disambiguated entity.

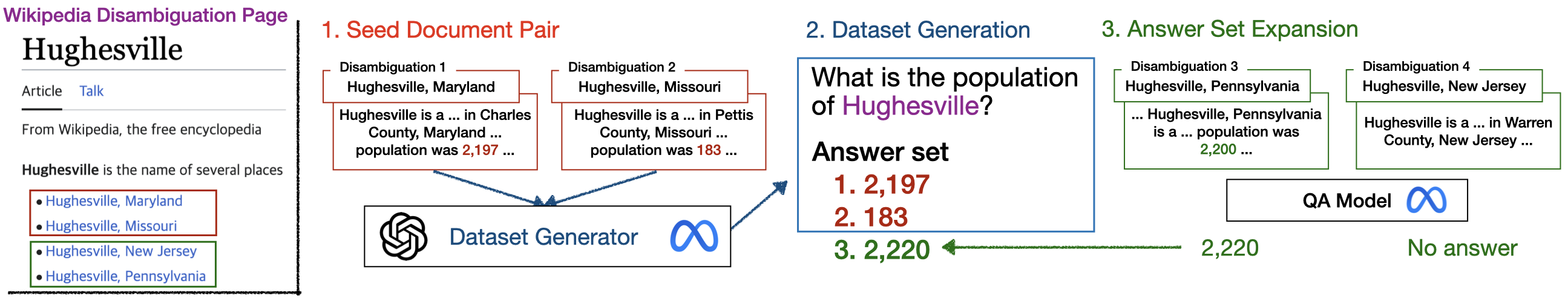

Q. How is the dataset generated?

A. We identify a surface name and a list of disambiguated entities from Wikipedia's disambiguation pages. We select two documents for generating a question and their corresponding answers. Subsequently, we gather additional answers from the remaining documents.

Q. What is the statistics of AmbigDocs?

A. We collected a total of 36,098 examples, where they are randomly split into train/dev/test splits with ratios of 60%/10%/30%, respectively. On average, each question has 2.92 answers, covering a total of 102,624 distinct entities.

Citations

If you find our work helpful, please cite us.

@article{lee2024ambigdocs,

title={AmbigDocs: Reasoning across Documents on Different Entities under the Same Name},

author={Lee, Yoonsang and Ye, Xi and Choi, Eunsol},

journal={arXiv preprint arXiv:2404.12447},

year={2024}

}

Contact

For any questions, please contact Yoonsang Lee.

We thank SituatedQA authors for sharing templates for generating this webpage.